13 Fragment Shaders

We learned that a WebGL program is made up of at least three programs: the host JavaScript program, the vertex shader, and the fragment shader. In the past two chapters, we focused on the vertex shader. In Chapter 11, we saw that it can be used to create complicated shapes, and, in the last chapter, we learned that uniform variables in the vertex shader can be used to create simple animations.

In this chapter, we turn our attention to the fragment shader. Recall from Chapter 7 that the fragment shader has two responsibilities: (1) deciding whether to discard a fragment or not, and (2) computing the color of the fragment if it is not discarded. We will see in this chapter that the fragment shader is very powerful because it determines the colors that finally appear on the screen.

As you might recall from Chapter 2, color is quite a complicated subject in its own right. A programmer must be aware of the color space of each color value they manipulate, and this is especially important when writing a fragment shader. Luckily, there are only two color spaces we need to worry about: linear and sRGB. In Program 1, we will discuss a function that converts from the linear space to the sRGB space and explain why it is important. This function will be used throughout the rest of the book.

The mechanism that makes the fragment shader powerful is the varying variable, which allows the vertex shader to pass information to the fragment shader. Varying variables allow us to create color gradients, which we will demonstrate in Program 2.

Moreover, varying variables allow the fragment shader to know about the fragment it is processing. One of the most useful pieces of information about a fragment is its position on the screen, and one way to set up the scene so that each fragment knows its position is to render a "full-screen quad," which we will discuss in Program 3. Knowing the fragment position, we can compute a color as a function of it, which amounts to defining an image, and we shall see in Program 4 that this image can be very complicated even when our fragment shader is quite simple.

13.1 Program 1: Color spaces

13.1.1 Color spaces and the fragment shader

Recall from Section 2.3 that a color space specifies the mapping from the RGB color cube to the actual color that appears on the screen. The color space used by your web browser is the sRGB color space. So, in an HTML file, when you specify the color #EA8032, which translates to the RGB value $(234, 128, 50)$, or approximately $(0.92, 0.50, 0.20)$ if we use floating-point numbers, you see the orange color ■ because the sRGB color space associates $(0.92, 0.50, 0.20)$ with this particular color. We also learned that the sRGB color space is not physical, meaning that a tristimulus value of $0.5$ is not half as powerful (i.e., as bright) as $1.0$. The color space where tristimulus values correspond to the light power emitted by the monitor is the linear color space. We also learned the rule of thumb that all intermediate calculations should be performed in the linear space while all output values should be in the sRGB space.

How do the above facts and rule apply to writing fragment shaders? The first thing to keep in mind is that any color value that appears on the screen is automatically in the sRGB color space, simply because sRGB is the color space that the browser uses. In all of our programs up to this point, this output color is the value we assign to the fragment shader's output variable, which we always name fragColor. This means that fragColor is always in the sRGB color space. (This no longer applies when the fragment shader does not output to the screen, but we will talk about this later.)

Second, to follow the "compute in linear, output in sRGB" rule, we should follow two more simple rules when we write our programs.

- All color variables other than fragColor are in the linear space.
- We must convert from linear to sRGB when assigning a value to fragColor.

Unfortunately, there is no automatic mechanism, such as a compiler check, that enforces these rules for us. The programmer must instead enforce them. In practice, being mindful of color spaces means converting colors from one space to another at the right places in your program.

13.1.2 Difference between the color spaces

The main point of Program 1 is to show the reader the visual difference between being mindful and not being mindful of color spaces. When you run the program, you will see what is depicted in the screenshot below.

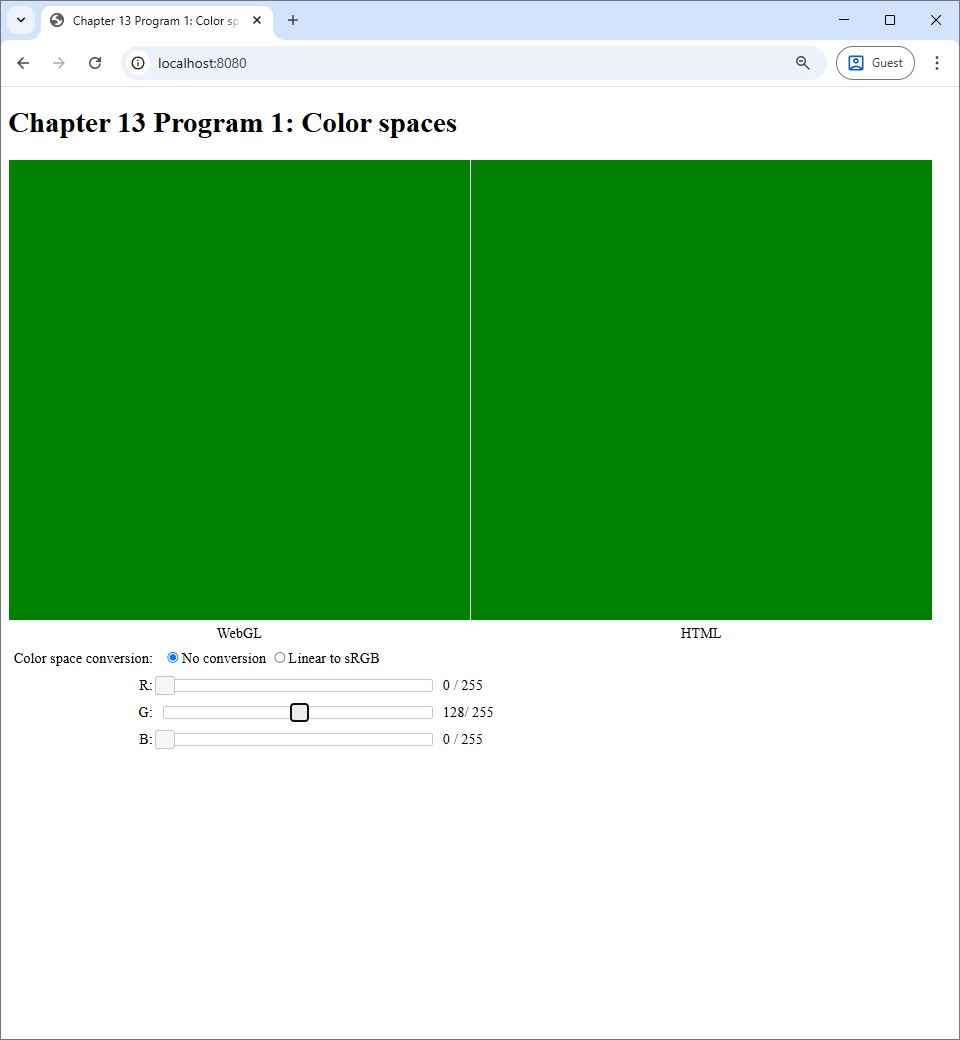

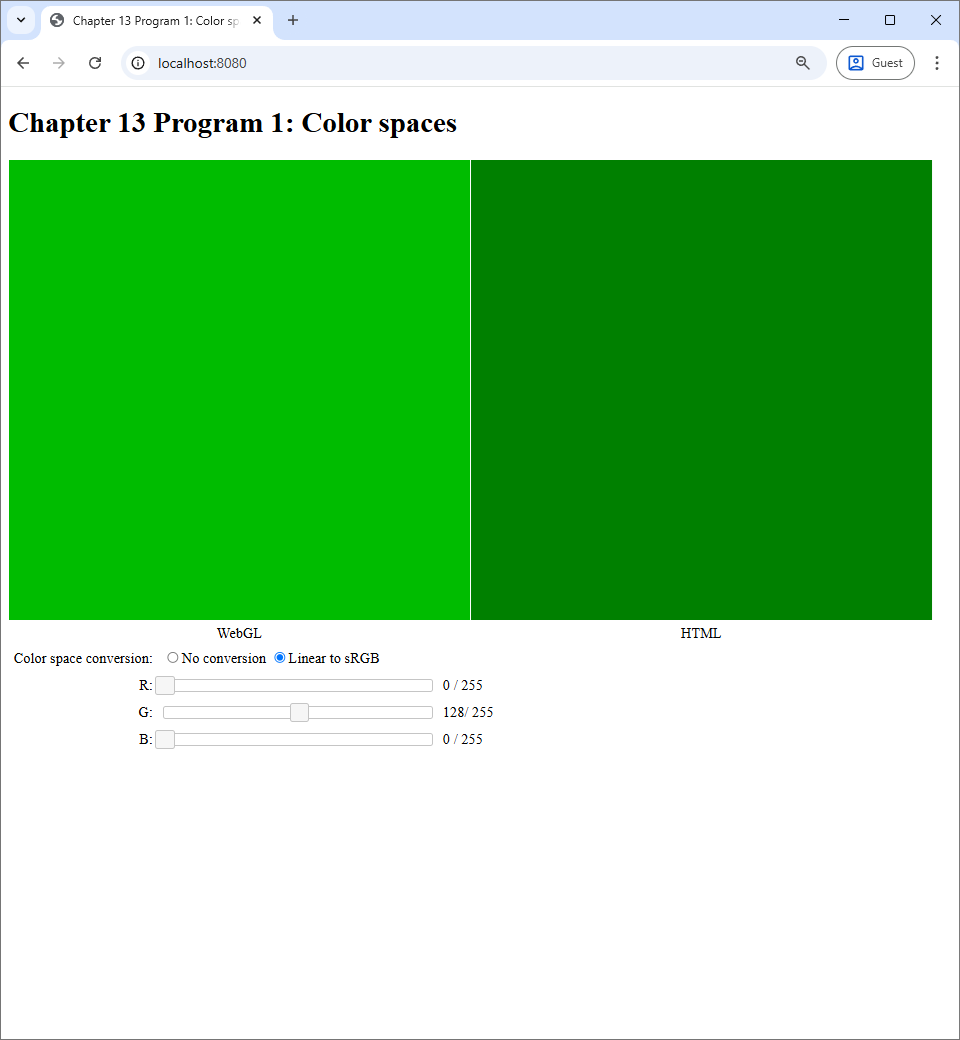

The program acts like a color picker you would see in a painting program like Microsoft Paint, GIMP, or Photoshop. There are three sliders, each corresponding to a tristimulus value in the RGB color space. There are two canvases, one labelled "WebGL" and the other "HTML," that show the color specified by the sliders. The WebGL canvas displays the color by running a WebGL program, and the HTML canvas displays the color by setting the background-color CSS property of the canvas itself. So, the color shown on the HTML canvas reflects how the web browser normally interprets color values. Lastly, the user can control how the WebGL canvas processes the color specified by the sliders. When the user chooses the "No conversion" radio button, the WebGL canvas outputs the color specified by the sliders without performing any calculation on it. However, when the user chooses the "Linear to sRGB" radio button, the WebGL program treats the color specified by the sliders as being in the linear color space and converts it from linear to sRGB before outputting it to the canvas. Note that the "Linear to sRGB" option is the one consistent with the rules we discussed in the last section, while the "No conversion" option is not.

To see what the options mean visually, let us move the G slider so that the G value is $128$, which corresponds to $128 / 255 \approx 0.5$.

With the "No conversion" option selected, both canvases show exactly the same color. This makes sense because, as we said earlier, the web browser's color space is sRGB. So, if the WebGL program just outputs the color without any modification, it shows the same color that the browser would natively show. However, if we switch to the "Linear to sRGB" option, the color in the WebGL canvas becomes brighter.

This behavior is consistent with what we learned in Section 2.3: the same RGB values appear brighter when they are interpreted in the linear color space than when they are interpreted in the sRGB color space. Let's take a look at the effect of the linear-to-sRGB conversion on other colors.

To repeat, the overall effect is that the linear-to-sRGB conversion results in brighter colors than outputting the color values directly. The exception is when every RGB value is either $0$ or $1$, in which case the converted color is the same as the direct color. As a result, omitting the linear-to-sRGB conversion at the last step has a visible effect: WebGL renderings appear too dark.
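To put numbers on this, the JavaScript sketch below applies the same per-channel formula that the fragment shader uses (see Section 13.1.3), ported to JavaScript purely for illustration, to a few channel values. Every value strictly between 0 and 1 comes out larger, while 0 and 1 are left unchanged; for instance, 0.5 becomes roughly 0.735.

```javascript
// JavaScript port of the per-channel linear-to-sRGB conversion.
// Like the GLSL version, it clamps its input to [0, 1].
function linearToSrgbSingle(c) {
  if (c <= 0.0) return 0.0;
  if (c < 0.0031308) return 12.92 * c;
  if (c >= 1.0) return 1.0;
  return 1.055 * Math.pow(c, 1.0 / 2.4) - 0.055;
}

// Channel values a slider might produce, already divided by 255.
const samples = [0.0, 0.1, 0.25, 0.5, 0.75, 1.0];
const converted = samples.map(linearToSrgbSingle);

samples.forEach((v, k) => {
  console.log(`${v.toFixed(2)} -> ${converted[k].toFixed(3)}`);
});
```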

We would like to stress that the reason we put a linear-to-sRGB conversion at the last step of a fragment shader is not to make the rendering brighter. We do so because we want all color calculations up to that point to be in the linear space so that those calculations make sense according to physics. In this program, there is no calculation other than the conversion. However, the next program performs color calculations even though we do not write them down explicitly in our code, so it is important to always stick to the rule even when our WebGL program seems very simple.

13.1.3 How the program works

We talked a lot about the linear-to-sRGB conversion and how important it is. Let us look at how it is implemented in code. The vertex shader is exceedingly simple: it just outputs the position fetched from the vertex buffer.

```glsl
#version 300 es

in vec2 vert_position;

void main() {
  gl_Position = vec4(vert_position, 0, 1);
}
```

The fragment shader receives two pieces of information from the host program through uniform variables. The first is the color specified by the sliders, represented by the color variable of type vec3. The second is whether that color is in the linear color space, represented by the useLinearColorSpace variable of type bool. As usual, the fragment shader outputs a vec4, which we always call fragColor.

```glsl
#version 300 es

precision highp float;

uniform vec3 color;
uniform bool useLinearColorSpace;

out vec4 fragColor;
```

Let us look at the host program and see how the uniform variables are set.

```javascript
let self = this;  // the arrow function below refers to self

// The sliders range over [0, 255]; normalize their values to [0, 1].
let r = this.redSlider.slider("value") / 255.0;
let g = this.greenSlider.slider("value") / 255.0;
let b = this.blueSlider.slider("value") / 255.0;
let useLinearColorSpace =
    $('input[name=colorSpace]:checked').val() === 'linear';

useProgram(this.gl, this.program, () => {
  let colorLocation = self.gl.getUniformLocation(self.program, "color");
  self.gl.uniform3f(colorLocation, r, g, b);

  // WebGL has no boolean-specific uniform setter, so uniform1i is used.
  let useLinearColorSpaceLocation = self.gl.getUniformLocation(
      self.program, "useLinearColorSpace");
  self.gl.uniform1i(useLinearColorSpaceLocation, useLinearColorSpace);

  setupVertexAttribute(
      self.gl, self.program, "vert_position", self.vertexBuffer, 2, 8, 0);
  drawElements(self.gl, self.indexBuffer, self.gl.TRIANGLES, 6, 0);
});
```

We can see that the program fetches the tristimulus values r, g, and b from the sliders and converts them to floating-point values in the $[0,1]$ interval by dividing them by $255$, the maximum value of the sliders. Then, it uses the uniform3f method of the WebGL context to set the color uniform variable. The value of useLinearColorSpace is obtained by checking whether the checked radio button is the "linear" one. Although useLinearColorSpace is a boolean both in JavaScript and in GLSL, we set it with the uniform1i method, whose name indicates that it is meant for integer variables, because the WebGL API has no method specifically designed for the boolean type.
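The conversion from slider readings to uniform values is plain arithmetic, so it can be isolated and checked on its own. The sketch below uses a hypothetical helper, colorUniformValues (not part of the book's code), that performs the same normalization and encodes the radio-button choice as the 0 or 1 integer that uniform1i expects. Passing the JavaScript boolean directly, as the host code does, also works, because WebGL converts true and false to 1 and 0 when the argument is an integer.

```javascript
// Hypothetical helper (not part of the book's code): turns slider readings
// in [0, 255] and the selected color-space option into uniform values.
function colorUniformValues(red, green, blue, colorSpace) {
  const MAX_SLIDER_VALUE = 255.0;
  return {
    color: [red, green, blue].map((v) => v / MAX_SLIDER_VALUE),
    // uniform1i takes an integer, so encode the boolean explicitly as 0 or 1.
    useLinearColorSpace: colorSpace === "linear" ? 1 : 0,
  };
}

const values = colorUniformValues(234, 128, 50, "linear");
console.log(values.color, values.useLinearColorSpace);
// Inside the host program, these could then be passed to WebGL as:
//   gl.uniform3f(colorLocation, ...values.color);
//   gl.uniform1i(useLinearColorSpaceLocation, values.useLinearColorSpace);
```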

The main function of the fragment shader looks at useLinearColorSpace and applies the linear-to-sRGB conversion if the variable is true.

```glsl
void main() {
  if (useLinearColorSpace) {
    // Treat the slider color as linear and convert it for display.
    fragColor = vec4(linearToSrgb(color), 1.0);
  } else {
    // Output the slider color unchanged.
    fragColor = vec4(color, 1.0);
  }
}
```

The linearToSrgb function converts a vec3 representing an RGB value in the linear color space to the corresponding RGB value in the sRGB color space.

```glsl
vec3 linearToSrgb(vec3 color) {
  return vec3(
      linearToSrgbSingle(color.r),
      linearToSrgbSingle(color.g),
      linearToSrgbSingle(color.b));
}
```

The function applies the same transformation, implemented by the linearToSrgbSingle function, to each of the R, G, and B channels. The linearToSrgbSingle function implements the function

$$
f_{\mathrm{linear}\rightarrow\mathrm{sRGB}}(x) = \begin{cases}
12.92\,x, & x \leq 0.0031308, \\
1.055\,x^{1/2.4} - 0.055, & x > 0.0031308,
\end{cases}
$$

that we previously discussed in Section 2.3.3.

```glsl
float linearToSrgbSingle(float c) {
  float a = 0.055;
  if (c <= 0.0) {
    return 0.0;                                // clamp below at 0
  } else if (c < 0.0031308) {
    return 12.92 * c;                          // linear segment near black
  } else if (c >= 1.0) {
    return 1.0;                                // clamp above at 1
  } else {
    return (1.0 + a) * pow(c, 1.0 / 2.4) - a;  // power-law segment
  }
}
```

From now on, we will always apply the linearToSrgb function as the final step of our fragment shaders. To repeat, we do this to comply with the rule we set up for ourselves: (1) the final output must be in the sRGB color space, and (2) all intermediate computations should be carried out in the linear color space.

13.2 Program 2: Using varying variables to create color gradients

In this section, we discuss a mechanism that makes the fragment shader extremely powerful: the varying variable. In the programs we have discussed so far, each primitive always has a single color. However, with a varying variable, we can render primitives whose individual pixels have different colors, and we will use this to create the color gradients that can be seen in the following screenshot.

13.2.1 Varying variables

Varying variables are a mechanism for passing information from the vertex shader to the fragment shader. For a piece of information to be passed, we must declare two variables with the same name and the same type: one in the vertex shader and the other in the fragment shader. For example, in Program 2, the information we want to pass from the vertex shader to the fragment shader is a vec3 that we use to represent an RGB color value. So, in the vertex shader, we declare a global variable called geom_color as follows.

```glsl
out vec3 geom_color;
```

Then, in the fragment shader, we declare another variable with the same type and the same name.

```glsl
in vec3 geom_color;
```

The only difference is that, in the vertex shader, we prefix the declaration with the keyword out, and, in the fragment shader, we instead use the keyword in. The keywords make sense because information always flows from the vertex shader to the fragment shader, so we can think of a varying variable as being an "output" of the vertex shader and also an "input" of the fragment shader. It is the job of the vertex shader to assign values to varying variables and the job of the fragment shader to read and process them.

Let us discuss the semantics of varying variables. That is, after the vertex shader assigns their values, what values do we expect the fragment shader to see when it reads the same varying variables? The answer is that they are usually not the values that the vertex shader assigned!

To understand how varying variables work, we need to revisit how the graphics pipeline works. Recall from Chapter 7 that the graphics pipeline has multiple stages. The vertex shader controls a step called "vertex processing." This step is followed by two more steps: "primitive assembly" and "rasterization." The fragment shader controls "fragment processing," which is the next step in the process.

When the host program renders primitives, say with the drawElements method of the WebGL context, it must specify a number of vertices in the vertex buffer. WebGL invokes the vertex shader on each of these vertices individually. So, if the vertex shader assigns a value to a varying variable, there is an independent value associated with each vertex, and the values at different vertices can differ. In the case of Program 2, geom_color can be red for one vertex and green for another.

Figure: geom_color associated with different vertices.

Then, in primitive assembly, vertices belonging to the same primitive are connected, and primitives are simplified. In the end, we are left with points, lines, and triangles such that each vertex has a geom_color value associated with it.

In rasterization, each primitive is turned into a number of fragments. The value of a varying variable at a fragment is an interpolation of the values at the vertices that make up the primitive. The type of interpolation used depends on the primitive type.

- If the primitive is a point, then the value is copied directly from the vertex.

- If the primitive is a line, then the value is a linear interpolation between the values at the endpoints of the line.

- If the primitive is a triangle, then the value is an interpolation computed using the barycentric coordinates of the fragment position.

Lastly, in fragment processing, WebGL invokes the fragment shader for each of the generated fragments, and the fragment shader sees the value that was interpolated by the rasterization process.
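The triangle case can be sketched on the CPU. The JavaScript below is an illustration, not how a GPU actually implements rasterization: it computes the barycentric coordinates of a point from ratios of signed triangle areas and uses them as weights to blend per-vertex values. It ignores perspective-correct interpolation, which makes no difference when, as in this chapter, every vertex has $w = 1$. The function names are illustrative.

```javascript
// Twice the signed area of triangle (u, v, w); points are [x, y] arrays.
function signedArea2(u, v, w) {
  return (v[0] - u[0]) * (w[1] - u[1]) - (w[0] - u[0]) * (v[1] - u[1]);
}

// Barycentric coordinates of point p with respect to triangle (a, b, c):
// each weight is the area of the sub-triangle formed by p and the other two
// vertices, divided by the area of the whole triangle. The weights sum to 1.
function barycentric(p, a, b, c) {
  const total = signedArea2(a, b, c);
  return [
    signedArea2(p, b, c) / total,
    signedArea2(a, p, c) / total,
    signedArea2(a, b, p) / total,
  ];
}

// Blend three per-vertex values (equal-length arrays) with the weights.
function interpolateVarying(weights, va, vb, vc) {
  return va.map((_, k) => weights[0] * va[k] + weights[1] * vb[k] + weights[2] * vc[k]);
}

// A triangle whose vertices are yellow, cyan, and magenta.
const a = [-0.5, -0.5], b = [0.5, -0.5], c = [0.5, 0.5];
const yellow = [1, 1, 0], cyan = [0, 1, 1], magenta = [1, 0, 1];

// At a vertex, the interpolated value equals that vertex's value...
console.log(interpolateVarying(barycentric(a, a, b, c), yellow, cyan, magenta));
// ...and at the centroid it is the average of the three vertex values.
const centroid = [(a[0] + b[0] + c[0]) / 3, (a[1] + b[1] + c[1]) / 3];
console.log(interpolateVarying(barycentric(centroid, a, b, c), yellow, cyan, magenta));
```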

We can now see why varying variables make the fragment shader powerful. With a varying variable, the fragment shader sees a different value at each fragment, which means that it can assign different colors to different pixels in a controlled manner. This capability allows us to create more complicated images than what we have achieved so far.

13.2.2 Color gradients

Now that we understand how varying variables work, it is time to see them in action. The color gradients that we see in Figure 13.5 are a direct result of rendering a mesh in which each vertex has an extra attribute, its color, represented by the vert_color attribute variable. In the host program's createBuffers method, we set up the mesh as follows.

```javascript
createBuffers() {
  // Each vertex: 2 floats of position followed by 3 floats of color.
  let vertexData = [
    -0.5, -0.5,      // First vertex
     1.0,  1.0, 0.0, // is yellow.
     0.5, -0.5,      // Second vertex
     0.0,  1.0, 1.0, // is cyan.
     0.5,  0.5,      // Third vertex
     1.0,  0.0, 1.0, // is magenta.
    -0.5,  0.5,      // Fourth vertex
     1.0,  1.0, 1.0  // is white.
  ];
  this.vertexBuffer = createVertexBuffer(
      this.gl, new Float32Array(vertexData));

  // Two triangles that together form a square.
  let indexData = [
    0, 1, 2,
    0, 2, 3
  ];
  this.indexBuffer = createIndexBuffer(
      this.gl, new Int32Array(indexData));
}
```

The mesh has four vertices, each with its own color, and it is made up of two triangles that form a square. In the vertex shader, we assign the attribute to the geom_color varying variable, which tells WebGL to interpolate the vertex colors during rasterization.
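Because position and color are interleaved in one buffer, the stride and offset values that the host program passes to setupVertexAttribute (4*5 and 4*2 bytes) follow directly from this layout. The sketch below uses a hypothetical helper, interleavedLayout (not part of the book's code), that computes these byte values from a list of per-attribute component counts; for Program 2 it gives a stride of 20 bytes and offsets of 0 and 8 bytes.

```javascript
// Hypothetical helper (not part of the book's code): computes the byte
// stride of an interleaved Float32 vertex and the byte offset of each
// attribute, given each attribute's number of components in order.
function interleavedLayout(componentCounts) {
  const bytesPerFloat = Float32Array.BYTES_PER_ELEMENT; // 4
  const offsets = [];
  let offset = 0;
  for (const count of componentCounts) {
    offsets.push(offset);
    offset += count * bytesPerFloat;
  }
  return { stride: offset, offsets };
}

// Program 2's layout: vert_position (2 floats), then vert_color (3 floats).
const layout = interleavedLayout([2, 3]);
console.log(layout.stride, layout.offsets);
```

Computing the values this way avoids hand-arithmetic mistakes when a vertex later gains more attributes.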

```glsl
#version 300 es

in vec2 vert_position;
in vec3 vert_color;

out vec3 geom_color;

void main() {
  gl_Position = vec4(vert_position, 0, 1);
  geom_color = vert_color;
}
```

The fragment shader, however, is a little more complicated. The interpolated color is available to the fragment shader via the geom_color varying variable. It is tempting to just set the output color to this value. Nevertheless, we have to remember the rule we discussed in the last section: we must apply linear-to-sRGB conversion as the last step in our fragment shader. This is exactly what is done in the main function of the fragment shader.

```glsl
in vec3 geom_color;

out vec4 fragColor;

void main() {
  fragColor = vec4(linearToSrgb(geom_color), 1.0);
}
```

The linearToSrgb function is the same one we discussed in the last section. To make the whole program work, we must set up the attributes and render the mesh in the host program, and the code that does this is as follows.

```javascript
let self = this;  // the arrow function below refers to self

useProgram(this.gl, this.program, () => {
  // Each vertex occupies 5 floats (4*5 bytes); the position comes first.
  setupVertexAttribute(
      self.gl, self.program, "vert_position", self.vertexBuffer, 2, 4*5, 0);
  // The color starts right after the 2 position floats (4*2 bytes).
  setupVertexAttribute(
      self.gl, self.program, "vert_color", self.vertexBuffer, 3, 4*5, 4*2);
  drawElements(self.gl, self.indexBuffer, self.gl.TRIANGLES, 6, 0);
});
```

The reader might recall that we apply the linear-to-sRGB conversion because we want the output color to be in the sRGB space while all intermediate calculations are in the linear space. Paradoxically, the code of Program 2 does not specify any color calculations at all. All it does is copy color values from one variable to another. There are no additions, subtractions, or multiplications of color variables in the code! However, although we do not specify any calculation explicitly, there is an implicit calculation involving colors: the color interpolation carried out by the graphics pipeline in the rasterization step. We want all color interpolations to be conducted in the linear space. So, to honor this, we apply the linear-to-sRGB conversion before we set the output of the fragment shader.

Not applying the linear-to-sRGB conversion at the end can result in a very different color gradient, as shown in Figure 13.9. We emphasize that Figure 13.9(a) is RIGHT, and Figure 13.9(b) is WRONG. A competent graphics programmer should not allow Figure 13.9(b) to happen. The reason is that the interpolation carried out by the graphics pipeline is linear in nature, and this calculation must be carried out in the linear color space in order for it to make any mathematical sense. Not applying the linear-to-sRGB conversion in the last step means that the colors being interpolated by the graphics pipeline are in the sRGB color space. This is not mathematically sound and is therefore wrong. It is thus important to always apply the linear-to-sRGB conversion at the last step even though the code does not directly specify any calculations on color values.

Figure 13.9: The color gradient of Program 2 (a) with and (b) without the final linear-to-sRGB conversion.
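The difference can be quantified at a single point. Halfway along the edge between the yellow and cyan vertices, the rasterizer produces the average of the two vertex colors, $(0.5, 1, 0.5)$. The JavaScript sketch below, which reuses a port of linearToSrgbSingle for illustration, compares what ends up in fragColor with and without the final conversion: with it, the red and blue channels written are about 0.735; without it, they stay at 0.5, which the browser then displays as a darker color.

```javascript
// JavaScript port of the per-channel linear-to-sRGB conversion.
function linearToSrgbSingle(c) {
  if (c <= 0.0) return 0.0;
  if (c < 0.0031308) return 12.92 * c;
  if (c >= 1.0) return 1.0;
  return 1.055 * Math.pow(c, 1.0 / 2.4) - 0.055;
}

const yellow = [1.0, 1.0, 0.0];
const cyan = [0.0, 1.0, 1.0];

// Linear interpolation at the midpoint of the edge (weight 0.5 each),
// as the rasterizer would compute it for geom_color.
const interpolated = yellow.map((y, k) => 0.5 * y + 0.5 * cyan[k]);

// Right: treat the interpolated value as linear and convert it.
const withConversion = interpolated.map(linearToSrgbSingle);
// Wrong: write the interpolated value to fragColor unchanged.
const withoutConversion = interpolated;

console.log(withConversion, withoutConversion);
```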

13.3 Program 3: The full-screen quad

Through the last program, we learned that the fragment shader can generate color gradients through the use of varying variables. This is, of course, not the only thing that the fragment shader and varying variables are capable of. In fact, the fragment shader can generate any image that we can encode in a GLSL program. To get a sense of what is possible, take a look at Shadertoy, a website that hosts GLSL programs that generate impressive images with minimal access to data.

While we will not generate images and animations like those in Shadertoy just yet in this chapter, we will generate some complicated ones with Program 4. To get Program 4 to work, though, we need to study a tool that it relies on: the full-screen quad (FSQ). Creating a full-screen quad is actually even easier than creating the color gradients in Program 2. Nevertheless, although the FSQ itself is a simple object, it can be used to create very complicated images as we shall see later.

What is an FSQ, then? As the name implies, it is simply a quad(rilateral) that covers the whole screen. The clip-space coordinates of the four corners of the screen are $(-1,-1,0,1)$, $(1,-1,0,1)$, $(1,1,0,1)$, and $(-1,1,0,1)$. So, an FSQ is a quad whose four corners are these four vertices, making it a rectangle. Note that we could already render such a shape with what we knew before this chapter. However, the FSQ we are introducing has an extra feature: when we render it, we interpolate the vertices' 2D positions and pass these positions to the fragment shader through a varying variable. The quad with interpolated positions is much more powerful than the one without.

It is very simple to set up an FSQ. We just need to create a vertex buffer with four vertices where each vertex has just a single attribute, its 2D position, and then connect the vertices to form a rectangle. This is done in the index.js file of Program 3 as follows.

```javascript
createBuffers() {
  // The four corners of the screen in clip space (x, y).
  let vertexData = [
    -1.0, -1.0,
     1.0, -1.0,
     1.0,  1.0,
    -1.0,  1.0
  ];
  this.vertexBuffer = createVertexBuffer(this.gl, new Float32Array(vertexData));

  // Two triangles that together cover the whole screen.
  let indexData = [
    0, 1, 2,
    0, 2, 3
  ];
  this.indexBuffer = createIndexBuffer(this.gl, new Int32Array(indexData));
}
```

In the vertex shader, we make the clip position from the 2D position attribute, and we also assign the attribute to a varying variable.

```glsl
in vec2 vert_position;

out vec2 geom_position;

void main() {
  gl_Position = vec4(vert_position, 0, 1);
  geom_position = vert_position;  // interpolated across the quad
}
```

Of course, in the host program, we need to configure the attribute accordingly, and this is done in the updateWebGL method as follows.

```javascript
updateWebGL() {
  let self = this;
  this.gl.clearColor(0.0, 0.0, 0.0, 0.0);
  this.gl.clear(this.gl.COLOR_BUFFER_BIT);

  useProgram(this.gl, this.program, () => {
    setupVertexAttribute(
        self.gl, self.program,
        "vert_position", self.vertexBuffer, 2, 8, 0);
    drawElements(self.gl, self.indexBuffer, self.gl.TRIANGLES, 6, 0);
  });

  window.requestAnimationFrame(() => self.updateWebGL());
}
```

The fragment shader now has access to a varying variable called geom_position, which is interpolated from the positions of the quad's four corners. These interpolated positions are precisely the clip-space coordinates of the fragment's position on the screen. The fragment shader thus becomes aware of the fragment it is processing, and it can choose the color to output based on this information. This is where all the magic happens, and we will showcase what we can do with it in the next section.
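Concretely, assuming no multisampling, the value of geom_position that a fragment sees is the clip-space position of its pixel's center. The sketch below uses a hypothetical helper, pixelCenterToClip (not part of the book's code), to compute that position for pixel $(i, j)$ of a width-by-height canvas, counting rows from the bottom as WebGL's window coordinates do. On a 4-by-4 canvas, the bottom-left and top-right pixel centers are $(-0.75, -0.75)$ and $(0.75, 0.75)$, so geom_position never quite reaches $\pm 1$.

```javascript
// Hypothetical helper (not part of the book's code): clip-space (x, y) of
// the center of pixel (i, j) on a width x height canvas, where column i
// counts from the left and row j counts from the bottom.
function pixelCenterToClip(i, j, width, height) {
  const x = ((i + 0.5) / width) * 2.0 - 1.0;
  const y = ((j + 0.5) / height) * 2.0 - 1.0;
  return [x, y];
}

// Bottom-left and top-right pixel centers of a 4 x 4 canvas.
console.log(pixelCenterToClip(0, 0, 4, 4));
console.log(pixelCenterToClip(3, 3, 4, 4));
```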

However, to keep this section self-contained, we will simply visualize the screen position as colors. We will use the R channel to represent the $x$-position and the G channel to represent the $y$-position. Because the positions are in the range $[-1,1]$, we need to transform them to the $[0,1]$ range with the mapping $v \mapsto 0.5v + 0.5$ before outputting them. Of course, we apply the linear-to-sRGB conversion at the end so as to comply with the rule we set up for ourselves in Section 13.1. The main function of the fragment shader is reproduced below.

```glsl
in vec2 geom_position;

out vec4 fragColor;

void main() {
  // Map x and y from [-1, 1] to [0, 1] and store them in R and G.
  vec3 color = vec3(geom_position * 0.5 + 0.5, 0.0);
  fragColor = vec4(linearToSrgb(color), 1.0);
}
```

After all is said and done, we end up with another color gradient, which is depicted in the figure below.

It may seem that we have not accomplished anything new, as we just got another color gradient with even fewer colors than what we saw in Program 2. But stay tuned! We will see how to take full advantage of the FSQ in the next program.

13.4 Program 4: Drawing a fractal

Program 4 displays an image of a fractal called the Julia set. The sliders allow the user to move the image around and change the zoom level. Two screenshots of the program are shown in the figure below.

The images that Program 4 shows are much more complicated and psychedelic than the simple images that we have seen so far in this chapter. However, Program 4 is just a fancier version of Program 3. The mesh and the vertex shader are exactly the same. The biggest difference is the fragment shader. Instead of directly outputting the geom_position varying variable as a color, Program 4's fragment shader converts the 2D position into a color that varies rapidly as the position changes. Let us see how it is done.

13.4.1 Julia set

A Julia set is a shape we call a "fractal." Intuitively, it is a shape where, when you zoom in on a part, you see structures that are similar to the overall structure. For our purpose, it is just a shape with complex details that is interesting to draw. A Julia set fits our goal well because it is a fractal that is easy to describe and program. So, let us start with the definition.

The definition of the Julia set is based on a process conducted on complex numbers. We are given two complex numbers $c$ and $p$. We then construct an infinite sequence of complex numbers $z_0, z_1, z_2, \dotsc$ according to the following equation:

$$
z_n = \begin{cases}
p, & n = 0, \\
z_{n-1}^2 + c, & n > 0.
\end{cases}
$$

For example, if $c = 1 + i$ and $p = 0$, then

$$
\begin{aligned}
z_0 &= p = 0, \\
z_1 &= z_0^2 + c = 0^2 + (1 + i) = 1 + i, \\
z_2 &= z_1^2 + c = (1 + i)^2 + (1 + i) = 2i + (1 + i) = 1 + 3i, \\
z_3 &= z_2^2 + c = (1 + 3i)^2 + (1 + i) = (-8 + 6i) + (1 + i) = -7 + 7i, \\
z_4 &= z_3^2 + c = (-7 + 7i)^2 + (1 + i) = -98i + (1 + i) = 1 - 97i.
\end{aligned}
$$

We can continue this process and compute $z_5$, $z_6$, and so on indefinitely. For a complex number $c$ and a positive real number $R$, the Julia set with parameters $c$ and $R$ is the set of complex numbers $p$ such that every term of the sequence $z_1, z_2, z_3, \dotsc$ ($z_0$ is not included) has absolute value less than $R$. Symbolically,

$$
\text{Julia set with parameters } c \text{ and } R = \{\, p : |z_n| < R \text{ for all } n = 1, 2, 3, \dotsc \,\}.
$$

Recall that, for a complex number $z = x + yi$, the absolute value of $z$, denoted by $|z|$, is the real number

$$
|z| = \sqrt{x^2 + y^2}.
$$
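Computing these terms by hand is error-prone, so the small JavaScript sketch below generates them. It represents a complex number as an [re, im] pair, mirroring the vec2 representation that Program 4 uses in GLSL; the names add, square, and juliaSequence are illustrative.

```javascript
// Complex numbers are [re, im] pairs, mirroring the vec2 representation.
const add = (u, v) => [u[0] + v[0], u[1] + v[1]];
// (x + yi)^2 = (x^2 - y^2) + (2xy)i
const square = ([x, y]) => [x * x - y * y, 2 * x * y];

// Returns [z_0, z_1, ..., z_count] where z_0 = p and z_n = z_{n-1}^2 + c.
function juliaSequence(p, c, count) {
  const zs = [p];
  for (let n = 1; n <= count; n++) {
    zs.push(add(square(zs[n - 1]), c));
  }
  return zs;
}

// The example above: c = 1 + i and p = 0.
const zs = juliaSequence([0, 0], [1, 1], 4);
zs.forEach(([re, im], n) => {
  console.log(`z_${n} = ${re} ${im < 0 ? "-" : "+"} ${Math.abs(im)}i`);
});
```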

From the definition above, it seems impossible to compute a Julia set, because we would have to predict everything that happens in a process that never finishes. So, when we draw a Julia set in practice, we settle for a simpler process that terminates in finite time. To do this, we pick an integer $N$ to serve as an upper bound on the number of calculation steps. That is, we start with $z_0 = p$ and compute $z_1$, $z_2$, and so on until we have computed $z_N$. Then, we check whether any of $z_1$, $z_2$, $\dotsc$, $z_N$ has absolute value greater than or equal to $R$. If one does, then we say $p$ is not in the Julia set. If none does, then we say that $p$ is in the Julia set.
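The truncated test can be sketched in JavaScript as follows, again with complex numbers as [re, im] pairs. The name inTruncatedJuliaSet is illustrative, and this sketch compares the magnitude $|z_n|$ with $R$ directly; Program 4 uses an equivalent but cheaper comparison.

```javascript
const add = (u, v) => [u[0] + v[0], u[1] + v[1]];
const square = ([x, y]) => [x * x - y * y, 2 * x * y];

// Truncated membership test: compute z_1, ..., z_N and report that p is
// not in the set as soon as some |z_n| >= R.
function inTruncatedJuliaSet(p, c, R, N) {
  let z = p;
  for (let n = 1; n <= N; n++) {
    z = add(square(z), c);
    if (Math.hypot(z[0], z[1]) >= R) {
      return false; // |z_n| >= R: p is outside the set
    }
  }
  return true; // no |z_n| reached R within N steps
}

console.log(inTruncatedJuliaSet([0, 0], [1, 1], 2, 100)); // false: |z_2| = sqrt(10)
console.log(inTruncatedJuliaSet([0, 0], [0, 0], 2, 100)); // true: every z_n is 0
```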

13.4.2 Visualizing the Julia set

Now that we know what a Julia set is, how do we go about creating a picture from it? Suppose that we have fixed $c$, $R$, and $N$. We can regard the image plane as a set of 2D points, each of which can serve as the initial value $p$ in the process above. We can then run the process and see whether $p$ is in the Julia set. If $p$ is in the set, we can assign the point $p$ one color (say, white), and, if not, another color (say, black). However, this leads to a monochrome picture that might not be as interesting as what we saw in Figure 13.11. The trick is to visualize the first index $n$ at which $|z_n| \geq R$. This is basically what we do in the fragment shader, whose main function is reproduced below:

```glsl
in vec2 geom_position;

uniform vec2 center;
uniform float scale;

out vec4 fragColor;

void main() {
  // Map the fragment's screen position to a point of the complex plane.
  vec2 p = (geom_position - center) * scale;
  vec2 c = vec2(-0.4, 0.6);  // c = -0.4 + 0.6i
  float R = 2.0;
  int N = 100;
  int n = julaNumIterations(p, R, c, N);
  vec3 color = scalarToColor(float(n) / float(N));
  fragColor = vec4(linearToSrgb(color), 1.0);
}
```

Here, geom_position is the varying variable that stores the on-screen position of the fragment being processed, and we get it from the full-screen quad as we did in Program 3. The center and scale uniform variables are taken from the sliders on the web page, and they are used to compute the position $p$ that is processed further. These variables allow the generated image to be moved around and zoomed in and out. Notice that we use vec2, the 2D vector type, to represent a complex number. This is convenient because a complex number also has two real components. One can also see that the parameters of the Julia set are $c = -0.4 + 0.6i$, $R = 2$, and $N = 100$.
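The effect of center and scale can be seen by evaluating the mapping p = (geom_position - center) * scale at the edges of the screen. In the JavaScript sketch below (screenToComplex and visibleRange are illustrative names), the screen's $x$ range $[-1, 1]$ maps to an interval of width $2 \cdot \text{scale}$ on the real axis, so a larger scale shows more of the complex plane, which zooms out, while center shifts which part of the plane is shown.

```javascript
// Mirrors p = (geom_position - center) * scale, with vec2 values as [x, y].
function screenToComplex(position, center, scale) {
  return [(position[0] - center[0]) * scale, (position[1] - center[1]) * scale];
}

// Interval of the real axis covered by the screen's x range [-1, 1].
function visibleRange(center, scale) {
  return [
    screenToComplex([-1, 0], center, scale)[0],
    screenToComplex([1, 0], center, scale)[0],
  ];
}

console.log(visibleRange([0, 0], 1.5)); // [-1.5, 1.5]
console.log(visibleRange([0.5, 0], 2.0)); // [-3, 1]
```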

We noted earlier that, to get a more interesting image, we visualize the number $n$, the smallest integer such that $|z_n| \geq R$. The function julaNumIterations computes just that (returning $N$ if no such $n \leq N$ exists), and we will discuss it later. Once we have $n$, our next task is to convert it to a color, and this is what scalarToColor does. To make the function easier to code, we pass it the fraction $n/N$ instead of the number $n$. In this way, the function does not need to know about $N$ at all. Lastly, we convert the color returned by the function to the sRGB color space in order to comply with the color-space rule we have been following since Program 1.

Let us now take a closer look at the functions used by the main function. The first is the julaNumIterations function, which is reproduced below.

```glsl
int julaNumIterations(vec2 p, float R, vec2 c, int N) {
  vec2 z = p;
  int n = 0;
  for (int i = 0; i < N; i++) {
    z = square(z) + c;        // next term of the sequence
    n = n + 1;
    if (dot(z, z) >= R*R) {   // |z|^2 >= R^2, i.e., |z| >= R
      break;
    }
  }
  return n;
}
```

We see that another advantage of using a vec2 to represent a complex number is that we can perform operations such as addition and subtraction with the built-in GLSL operators. However, we cannot compute the square of z with z*z, because multiplying two complex numbers works differently from the way GLSL multiplies two vec2 values, which is component by component. Instead, we created a function square to do the squaring.

```glsl
vec2 square(vec2 z) {
  return vec2(z.x * z.x - z.y * z.y, 2.0 * z.x * z.y);
}
```

The function follows the formula

$$
(x + yi)^2 = (x^2 - y^2) + (2xy)\,i.
$$
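To see where this formula comes from, one can expand the square and use $i^2 = -1$:

$$
\begin{aligned}
(x + yi)^2 &= x^2 + 2xy\,i + y^2 i^2 \\
&= x^2 + 2xy\,i - y^2 \\
&= (x^2 - y^2) + (2xy)\,i.
\end{aligned}
$$

The real part becomes the x component of the returned vec2, and the imaginary part becomes the y component.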

Notice also that we check whether $|z| \geq R$ with the condition

```glsl
dot(z, z) >= R*R
```

As discussed in Chapter 10, dot is a built-in GLSL function that computes the dot product of its two input vectors. So, dot(z, z) is equal to z.x * z.x + z.y * z.y, which is equal to $|z|^2$ if we regard $z$ as a complex number. Because both $|z|$ and $R$ are nonnegative, $|z| \geq R$ holds exactly when $|z|^2 \geq R^2$. We check whether dot(z, z) is greater than or equal to R * R because it is faster than taking the square root and then checking whether the result is greater than or equal to R.
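For experimenting outside the shader, the function can be ported to JavaScript almost line by line, as in the sketch below (a port for illustration, with $z$ stored as two numbers). One property worth noticing: a point that never escapes and a point that escapes exactly at the last step both return $N$, so both receive the last color of the palette.

```javascript
// JavaScript port of the GLSL julaNumIterations function, with the
// complex number z stored as x (real part) and y (imaginary part).
function julaNumIterations(p, R, c, N) {
  let [x, y] = p;
  let n = 0;
  for (let i = 0; i < N; i++) {
    // z = square(z) + c, written out component by component.
    [x, y] = [x * x - y * y + c[0], 2 * x * y + c[1]];
    n = n + 1;
    // Same test as dot(z, z) >= R*R: compare squared magnitudes, no sqrt.
    if (x * x + y * y >= R * R) {
      break;
    }
  }
  return n;
}

console.log(julaNumIterations([0, 0], 2.0, [1, 1], 100)); // 2
console.log(julaNumIterations([0, 0], 2.0, [0, 0], 100)); // 100 (never escapes)
```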

Now that we have the number of iterations $n$ from julaNumIterations, we need to turn this number into a color. This is the job of the scalarToColor function. It takes a floating-point number from the interval $[0,1]$, computed by dividing $n$ by the maximum iteration number $N$, and returns a color corresponding to that ratio. The code of the function is reproduced below.

const vec3 red = vec3(1.0, 0.0, 0.0);
const vec3 yellow = vec3(1.0, 1.0, 0.0);
const vec3 green = vec3(0.0, 1.0, 0.0);
const vec3 cyan = vec3(0.0, 1.0, 1.0);
const vec3 blue = vec3(0.0, 0.0, 1.0);
const vec3 purple = vec3(0.5, 0.0, 1.0);
const vec3 magenta = vec3(1.0, 0.0, 1.0);

vec3 scalarToColor(float x) {
  if (x < 1.0 / 6.0) {
    float alpha = x * 6.0;
    return mix(red, yellow, alpha);
  } else if (x < 2.0 / 6.0) {
    float alpha = (x - 1.0 / 6.0) * 6.0;
    return mix(yellow, green, alpha);
  } else if (x < 3.0 / 6.0) {
    float alpha = (x - 2.0 / 6.0) * 6.0;
    return mix(green, cyan, alpha);
  } else if (x < 4.0 / 6.0) {
    float alpha = (x - 3.0 / 6.0) * 6.0;
    return mix(cyan, blue, alpha);
  } else if (x < 5.0 / 6.0) {
    float alpha = (x - 4.0 / 6.0) * 6.0;
    return mix(blue, purple, alpha);
  } else {
    float alpha = (x - 5.0 / 6.0) * 6.0;
    return mix(purple, magenta, alpha);
  }
}

The code is long, but it operates on a simple principle. We pick seven colors (red, yellow, green, cyan, blue, purple, and magenta) and associate them with the ratio values (stored in the variable x) $0/6$, $1/6$, $2/6$, $3/6$, $4/6$, $5/6$, and $6/6$, respectively. These seven values partition the interval $[0,1]$ into 6 subintervals: $[0/6,1/6)$, $[1/6,2/6)$, and so on up to $[5/6, 6/6]$. For $x$ in each subinterval, we linearly interpolate between the colors associated with its endpoints. For the subinterval starting at $k/6$, the interpolation weight is alpha $= 6(x - k/6)$, which runs from 0 at the left endpoint to 1 at the right endpoint. Linear interpolation is done with GLSL's built-in mix function, whose signature is

mix(a, b, alpha)

and it computes (1.0 - alpha) * a + alpha * b. This built-in function is very useful, and we will use it in future programs.
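Because the six branches of scalarToColor differ only in which pair of colors they blend, the same gradient can be expressed as a table of color stops plus a computed index. The JavaScript sketch below does that, together with a port of mix; both functions and the stops array are hypothetical illustrations, not code from the book. One deliberate difference: it clamps x to $[0,1]$, which the GLSL version does not do, so the two agree only for inputs inside that interval.

```javascript
// Port of GLSL's mix: (1 - alpha) * a + alpha * b, applied per component.
function mix(a, b, alpha) {
  return a.map((ai, k) => (1 - alpha) * ai + alpha * b[k]);
}

// The seven color stops used by scalarToColor, at x = 0/6, 1/6, ..., 6/6.
const stops = [
  [1.0, 0.0, 0.0], // red
  [1.0, 1.0, 0.0], // yellow
  [0.0, 1.0, 0.0], // green
  [0.0, 1.0, 1.0], // cyan
  [0.0, 0.0, 1.0], // blue
  [0.5, 0.0, 1.0], // purple
  [1.0, 0.0, 1.0], // magenta
];

// Piecewise-linear gradient equivalent to the if/else chain for x in [0, 1].
function scalarToColor(x) {
  const segments = stops.length - 1;                 // 6 subintervals
  const t = Math.min(Math.max(x, 0), 1) * segments;  // position measured in subintervals
  const i = Math.min(Math.floor(t), segments - 1);   // subinterval index; x = 1 uses the last one
  const alpha = t - i;                               // same as (x - i/6) * 6 in the GLSL code
  return mix(stops[i], stops[i + 1], alpha);
}

console.log(JSON.stringify(scalarToColor(0.5))); // [0,1,1] (cyan)
```

A table-driven version like this makes it easy to change the palette by editing stops alone.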

After computing a color from the number of iterations $n$, we convert it from the linear color space to the sRGB color space using the linearToSrgb function, which we discussed in Program 1.
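As a reminder of what a linear-to-sRGB conversion typically computes, the JavaScript sketch below implements the standard piecewise sRGB encoding for colors with channels in $[0,1]$. This is an assumption about the formula: the linearToSrgb function from Program 1 may use a different or simpler curve (for example, a plain power of 1/2.2), so treat this only as an illustration of the idea.

```javascript
// Standard sRGB encoding of one linear channel value in [0, 1].
// Assumption: the book's linearToSrgb may use a different approximation.
function linearToSrgbChannel(c) {
  if (c <= 0.0031308) {
    return 12.92 * c;                            // linear segment near black
  }
  return 1.055 * Math.pow(c, 1.0 / 2.4) - 0.055; // power-law segment elsewhere
}

// Apply the encoding to each channel of an RGB color.
function linearToSrgb(rgb) {
  return rgb.map(linearToSrgbChannel);
}

console.log(linearToSrgb([0.5, 0.5, 0.5])); // each channel is about 0.735
```

The encoding brightens mid-range values, which is why mixing colors after this conversion, rather than before, would give visibly different gradients.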

13.4.3 The rest of the program

The fragment shader, which we finished discussing in the last section, is clearly the most complicated part of Program 4. The host program and the vertex shader are much simpler in comparison. For the fragment shader to work its magic, the host program needs to render a full-screen quad and pass user input from the sliders to the GLSL program. The full-screen quad is constructed the same way as in Program 3, so we shall not repeat that part here. The main difference lies in how the program sets up the uniform variables, and the Javascript code for that part is shown below.

let centerX = this.centerXSlider.slider("value") / 1000.0;
let centerY = this.centerYSlider.slider("value") / 1000.0;
let scale = Math.pow(2, -this.scaleSlider.slider("value") / 100.0);

useProgram(this.gl, this.program, () => {
  // Pass the slider values to the uniforms declared in the fragment shader.
  let centerLocation = self.gl.getUniformLocation(self.program, "center");
  self.gl.uniform2f(centerLocation, centerX, centerY);
  let scaleLocation = self.gl.getUniformLocation(self.program, "scale");
  self.gl.uniform1f(scaleLocation, scale);

  // Bind the quad's vertex positions and draw its two triangles (6 indices).
  setupVertexAttribute(
      self.gl, self.program, "vert_position", self.vertexBuffer, 2, 8, 0);
  drawElements(self.gl, self.indexBuffer, self.gl.TRIANGLES, 6, 0);
});

We see that the code reads the centerX and centerY values from the corresponding sliders and sets the uniform variable center, which is declared in the fragment shader, to those values. It also reads the scale value from another slider and sets the scale uniform variable to it.
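The host code maps slider positions to uniform values with two different rules: the center sliders use a linear mapping, while the scale slider uses an exponential one. The hypothetical helpers below (sliderToCenter and sliderToScale are illustrative names, not functions in the program) isolate those two formulas. With the exponential rule, every 100 slider units multiplies the scale by the same factor of 2, so equal slider movements give equal zoom steps; whether a smaller scale means zooming in depends on how the fragment shader uses scale, which is not shown here.

```javascript
// Center sliders: slider units are divided by 1000, so 1 unit = 0.001.
function sliderToCenter(value) {
  return value / 1000.0;
}

// Scale slider: scale = 2^(-value / 100), so every 100 units halves the scale.
function sliderToScale(value) {
  return Math.pow(2, -value / 100.0);
}

console.log(sliderToScale(0));    // 1
console.log(sliderToScale(100));  // 0.5
console.log(sliderToScale(-200)); // 4
console.log(sliderToCenter(250)); // 0.25
```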

The vertex shader is exactly the same as that of Program 3. Again, it is responsible for setting up the geom_position varying variable so that the fragment shader has access to the screen position of each fragment it processes. Because the code is exactly the same, we shall not repeat it here.

All in all, we see that we can generate a very complex image by coding the image generation logic in the fragment shader and using the full-screen quad and a simple vertex shader to give the fragment shader access to the position of each fragment.

13.5 Summary

- Fragment shaders are responsible for computing the color of each fragment. Therefore, they largely determine what colors the user sees.

- WebGL programs output colors to the framebuffer, and the web browser assumes that these color values are in the sRGB color space. This is the color space that web browsers use by default: colors in HTML and CSS are in this space.

- However, because WebGL programs inevitably perform calculations on colors, we must take great care to keep the colors inside GLSL code in the linear color space. This means that, before we set the final output of our fragment shader, we must convert the output color from the linear to the sRGB color space. This is done by the linearToSrgb function, which we discussed in Program 1.

- Like vertex shaders, fragment shaders have access to uniform variables. However, they can also receive information from the vertex shader in the form of varying variables.

- The values of varying variables inside a fragment shader are interpolations of the values set by the vertex shader at the vertices. This makes it possible for the fragment shader to output different colors for different fragments. For example, Program 2 shows how a varying variable can be used to create color gradients.

- An important tool for creating complex images with WebGL programs is the full-screen quad, which is a rectangle that covers the whole screen. Program 3 shows how to set one up.

- The full-screen quad allows the fragment shader to know the position of the fragment being processed. Program 4 shows how this information can be used to create a complex image like that of a fractal.
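To make the interpolation of varying variables concrete, the JavaScript sketch below computes the value a fragment would receive inside one triangle, using barycentric weights. It assumes no perspective division (w = 1 at every vertex, as with a flat 2D full-screen quad); WebGL in general uses perspective-correct interpolation, which reduces to this plain weighting in that case. The function interpolateVarying is a hypothetical illustration, not part of any of the programs.

```javascript
// Interpolate a scalar varying at point p inside triangle (a, b, c),
// given the values va, vb, vc written by the vertex shader at those vertices.
// Assumes w = 1 at every vertex (no perspective), as for a 2D full-screen quad.
function interpolateVarying(p, [a, b, c], [va, vb, vc]) {
  // Twice the signed area of triangle (p1, p2, p3).
  const area2 = (p1, p2, p3) =>
    (p2[0] - p1[0]) * (p3[1] - p1[1]) - (p3[0] - p1[0]) * (p2[1] - p1[1]);
  const total = area2(a, b, c);
  // Each weight is the relative area of the sub-triangle opposite its vertex.
  const wa = area2(p, b, c) / total;
  const wb = area2(a, p, c) / total;
  const wc = area2(a, b, p) / total;
  return wa * va + wb * vb + wc * vc;
}

// The vertex shader writes each vertex's x coordinate into the varying.
const tri = [[0, 0], [1, 0], [0, 1]];
console.log(interpolateVarying([0.25, 0.25], tri, [0, 1, 0])); // 0.25
```

Because the vertex values here are the vertices' own x coordinates, the interpolated value at any interior point equals that point's x coordinate, which is how a varying such as geom_position lets each fragment know where it is.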